Benny Tritsch

29 Mar 2019

A common question from my customers is whether graphics processing units (GPUs) really improve the remote end-user experience in virtual desktop environments. The answer is yes, they do in many use cases, both on premises and in the cloud. One reason is that the central processing unit (CPU) load on a remoting host can be reduced significantly by offloading the graphics calculations used in many modern Windows applications to a GPU. Another reason is that GPUs can encode and decode video much more efficiently than any CPU. Simply put, offloading graphics calculations and video codecs to a GPU improves the remote end-user experience. This article is an introduction to how GPUs work and why this is beneficial for remoting environments.

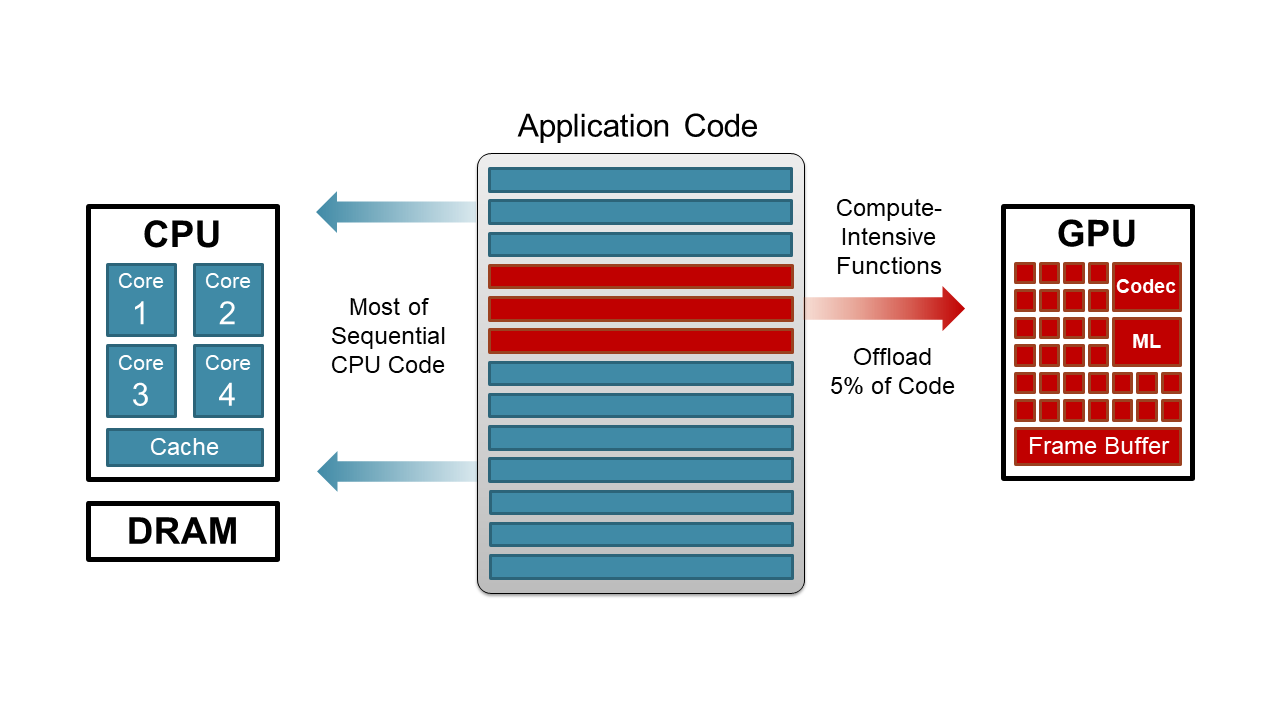

Before looking at GPUs, it’s important to understand what a CPU does. In a nutshell, a CPU is in charge of a computer system and orchestrates almost all of its components. It manages the system’s activities - like component communication, disk queue, and memory retrieval - and it executes instructions received from a computer program. A CPU has a certain number of cores (units that can process instructions at the same time). Each CPU core is made up of a control unit, some arithmetic logic units (ALUs), and some memory (cache for short-term storage; DRAM, which sits outside the core, holds longer-term working data).

The CPU's control unit retrieves data from memory. The ALU performs arithmetic and logical operations on the data, and the control unit moves the results back into memory. The ALU is multifunctional in that it can perform a large set of arithmetic and logical operations. On a multi-core processor, each core has a certain number of ALUs, and each ALU can execute one calculation at a time. So, in this simplified model, a processor with 8 cores and 8 ALUs per core can execute up to 64 calculations within a clock cycle. In this context, the clock speed is an important number because it defines how many cycles a CPU executes per second, measured in gigahertz (GHz). In simple terms, a cycle is a single tick of the CPU clock, during which the smallest unit of processor activity is carried out.
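The arithmetic above can be written out as a small Python sketch. It follows the simplified model from the paragraph (one calculation per ALU per cycle); the 3.0 GHz clock speed and the function names are assumptions made for illustration only, and real processors rarely sustain this theoretical peak.

```python
def calculations_per_cycle(cores: int, alus_per_core: int) -> int:
    """Upper bound under the simplified model: one calculation per ALU per cycle."""
    return cores * alus_per_core


def peak_calculations_per_second(cores: int, alus_per_core: int, clock_ghz: float) -> float:
    """Theoretical peak: calculations per cycle times cycles per second."""
    cycles_per_second = clock_ghz * 1e9  # 1 GHz = one billion cycles per second
    return calculations_per_cycle(cores, alus_per_core) * cycles_per_second


# 8 cores with 8 ALUs each, as in the text; the 3.0 GHz clock is an assumed value.
per_cycle = calculations_per_cycle(8, 8)
peak = peak_calculations_per_second(8, 8, 3.0)
print(f"{per_cycle} calculations per cycle, about {peak:.3g} per second at 3.0 GHz")
```

The point of the sketch is only that the per-cycle figure scales with the number of execution units, which is where a GPU with thousands of simple units gains its advantage.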

A GPU, on the other hand, is much more specialized. Its primary job is to rapidly manipulate and alter computer graphics elements. The goal is to accelerate the creation of images in video memory intended for output to a display device. The graphics chips in modern GPUs include hundreds or even thousands of very simple processing units called shaders (or "cores"). Their highly parallel structure makes GPUs very efficient at a well-defined set of graphics calculations, which they can perform extremely fast and with relatively low energy consumption.

A good example is calculating and coloring the individual pixels of complex 2D or 3D scenes, which is called rasterizing or rendering. The pixel data is temporarily stored in a special graphics memory called the frame buffer. The frame buffer represents the physical pixels on one or more computer screens. As soon as the entire scene in the frame buffer is complete, a pixel stream of the rendered scene is sent to the screen. The update frequency of the frame buffer or the screen is measured in frames per second (FPS). The scene refresh rate can range from once every couple of seconds to hundreds of times per second, depending on the complexity and dynamics of the scene and on the screen resolution. In a GPU-accelerated system, the CPU is the orchestrator or broker: whenever it receives instructions pertaining to graphics, it offloads the graphics calculations to the hundreds or thousands of shaders on a dedicated GPU.
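To make the idea of per-pixel work concrete, here is a minimal Python sketch of filling a frame buffer. The `gradient_shader` function and the tiny 4x3 resolution are hypothetical and chosen purely for illustration; this is not how a real graphics API is programmed. What it shows is that each pixel's color is computed independently of all others, which is the property that lets a GPU spread the work across many shaders, whereas this CPU loop handles one pixel after another.

```python
from typing import Callable, List, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), each 0..255


def gradient_shader(x: int, y: int, width: int, height: int) -> Color:
    """Hypothetical per-pixel function: the color depends only on the pixel position."""
    red = 255 * x // (width - 1) if width > 1 else 0
    green = 255 * y // (height - 1) if height > 1 else 0
    return (red, green, 128)


def render(width: int, height: int,
           shader: Callable[[int, int, int, int], Color]) -> List[List[Color]]:
    """Fill a frame buffer (rows of pixels) by running the shader once per pixel."""
    return [[shader(x, y, width, height) for x in range(width)]
            for y in range(height)]


# A tiny 4x3 "screen"; a real frame buffer holds millions of pixels.
frame_buffer = render(4, 3, gradient_shader)
for row in frame_buffer:
    print(row)
```

Because no pixel depends on its neighbors here, the order of evaluation does not matter, so the same function could in principle run for all pixels at once.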

In a virtual desktop environment, the pixel stream doesn’t go to a physical monitor. Instead, the pixel stream is encoded into a video stream and redirected to the remoting client. Most modern GPUs include helper units for video encoding and decoding (codec). This allows for efficient hardware-accelerated encoding or decoding of video, such as MP4 files, using the H.264/H.265 family of algorithms, also known as MPEG-4 AVC (Advanced Video Coding) and HEVC (High Efficiency Video Coding), respectively. This is beneficial not only when playing back videos on a Windows desktop, but also for encoding the remoting protocol data stream. Modern versions of the major remoting protocols can use H.264/H.265 streams for screen content redirection.
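A quick back-of-the-envelope calculation suggests why the pixel stream is encoded rather than sent as is. The sketch below computes the bitrate of an uncompressed pixel stream; the resolution, color depth, and frame rate are assumed example values, not figures from a specific remoting product.

```python
def raw_bitrate_mbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Bitrate of an uncompressed pixel stream in megabits per second."""
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps / 1_000_000


# Assumed example: a Full HD screen, 24-bit color, 30 frames per second.
mbps = raw_bitrate_mbps(1920, 1080, 24, 30)
print(f"Uncompressed: {mbps:.0f} Mbit/s")
```

Under these assumptions the raw stream comes to roughly 1.5 Gbit/s for a single session, which is far more than a typical remote connection can carry and is why a video codec sits between the frame buffer and the remoting client.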

High-definition video streams can easily saturate a CPU. Multiuser environments benefit because the CPU can offload video encoding and graphics rendering to the GPU, which reduces the CPU load massively and leaves user sessions with additional CPU resources for other jobs. This can noticeably enhance the remote end-user experience.

Applications built on top of graphics application programming interfaces (APIs) tell the shaders what to do. In today’s Windows world, DirectX and OpenGL are the most popular 3D graphics APIs. Both were designed to offload their graphics instructions to a graphics processor if an adequate graphics card and corresponding driver are present. Computer games and CAD/CAM applications are common users of graphics APIs, but they are no longer the only ones: graphics API usage has become mainstream for thousands of Windows applications, including the Desktop Window Manager (yes, the graphical shell), Microsoft Office, Microsoft Edge and Google Chrome. This is why Windows performance improves massively when a GPU is present. In addition, some DirectX and most OpenGL commands will simply not work if no physical GPU is present to do the rendering.

If you want to comment, please do so through Twitter, using my handle @drtritsch.